Best practices for A/B testing

Helpful guidelines based on best practices

A/B testing is a powerful tool for validating the impact of optimisations to a website, app or marketing campaign. Statistics play a crucial role: they determine whether the observed results are significant or simply due to chance.

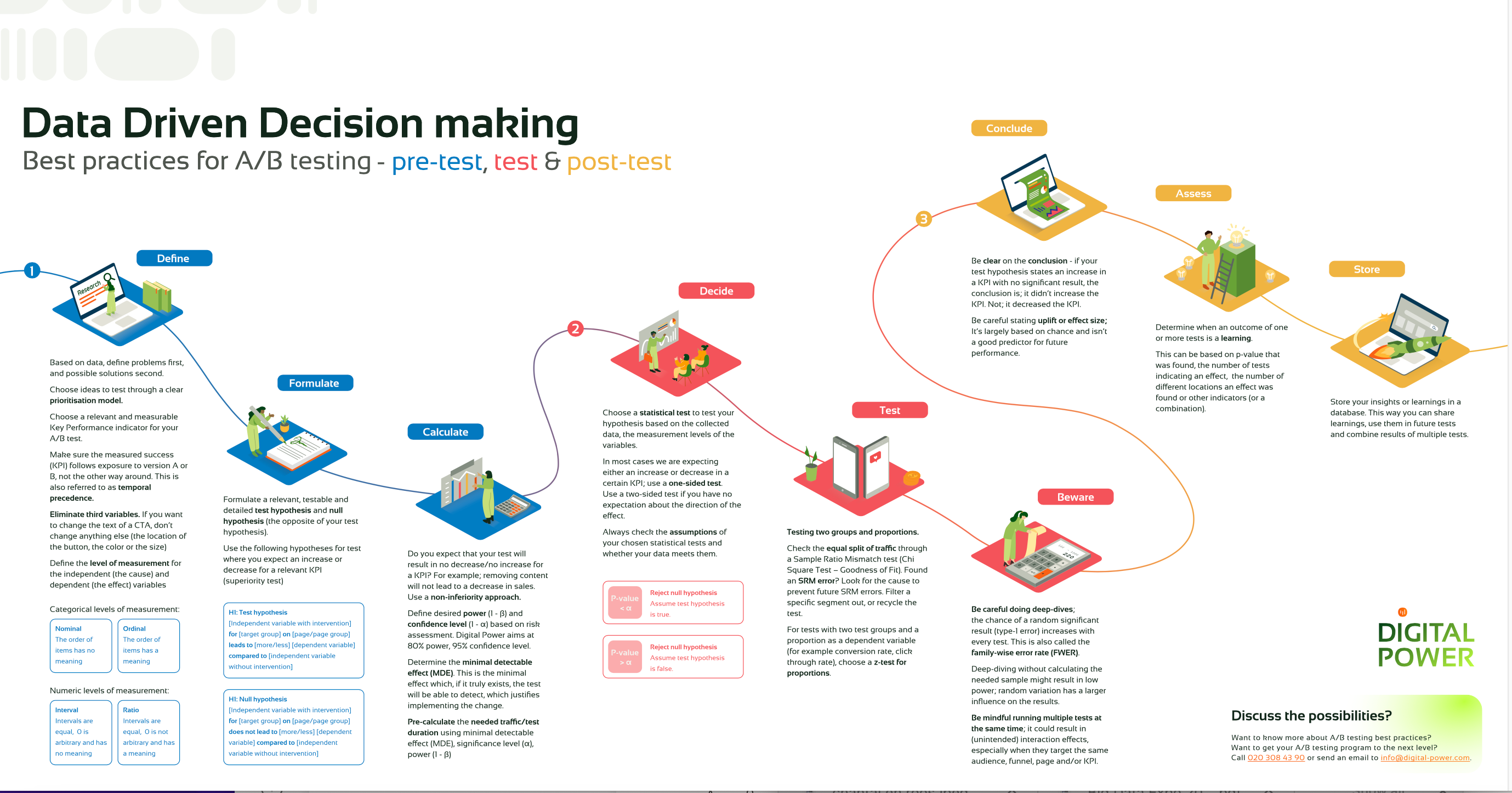

Starting an A/B testing programme can feel overwhelming, and obtaining reliable insights can seem like 'mission impossible'. That's why we've created an A/B testing infographic, based on Digital Power's best practices, so you can get started right away. The guidelines are useful if you are just starting with A/B testing, but also if you are more experienced and want to improve your tests.

In addition to our guidelines, this blog offers some tools and practical tips to help you get off to a good start. These tips help lay the right foundation for obtaining valuable results and insights.

3 essential steps before an experiment goes live

Step 1: Define user problems based on data

We often believe we know precisely which problems users run into when interacting with products or websites. However, proper research is needed to get a clear and accurate picture of those problems and their scale. Ideally, problems should be derived from several different (data) sources. Only when you know precisely which problem you are trying to solve can you come up with solutions.

Step 2: Set clear goals and hypotheses

To conduct an A/B test, it is crucial to clearly define the goals beforehand. Tip: these usually relate to the problem you are trying to solve. With a clear goal in mind, you can build more effective tests and measure the results as objectively as possible. It also helps to think about how you will measure this goal. Only when you know which data you will analyse and what effect you expect can you formulate good hypotheses and choose the right statistical test(s).
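
For a conversion-rate goal, a hypothesis might read "the new variant converts at a different rate than the control", tested against the null hypothesis that both convert equally often. The sketch below shows one common test for that setup, a two-sided two-proportion z-test with a pooled proportion, in Python using only the standard library. The function name and example counts are hypothetical, and the test assumes independent users and samples large enough for the normal approximation.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z statistic, two-sided p-value). Hypothetical helper for illustration.
    """
    p_a = conv_a / n_a  # conversion rate of the control (A)
    p_b = conv_b / n_b  # conversion rate of the variant (B)
    # Pooled conversion rate, assuming the null hypothesis (no difference) is true
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=560, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With 500 of 10,000 control users and 560 of 10,000 variant users converting, z is about 1.89 and the p-value is just under 0.06, so at a 5% significance level this difference would not count as significant.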

Step 3: Use a sufficiently large sample size

The sample size must be large enough to obtain reliable statistical results from an A/B test. This minimises the influence of chance on the results and increases the probability that a difference you observe is caused by the independent variable (the change being tested). The required size depends on your baseline conversion rate, the smallest effect you want to detect, the significance level and the desired statistical power. We created an A/B Test Calculator to help you determine the correct sample size for your test.
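
As an illustration of what such a calculation involves, here is a minimal Python sketch, assuming a conversion-rate goal, a 50/50 split and the standard normal-approximation formula for comparing two proportions. It is not necessarily the method behind the A/B Test Calculator; the function name, defaults and example values are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline: float, relative_mde: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per variant for a two-sided two-proportion test.

    baseline: current conversion rate, e.g. 0.05 for 5%
    relative_mde: smallest relative lift worth detecting, e.g. 0.10 for +10%
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)          # rate we want to be able to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p1 + p2) / 2                          # average rate under the null
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


print(sample_size_per_group(baseline=0.05, relative_mde=0.10))
```

For a 5% baseline and a 10% relative lift (5% to 5.5%), this gives roughly 31,000 users per variant. Halving the detectable effect roughly quadruples the requirement, which is why small expected effects call for long-running tests.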

What is important when analysing the results?

Always check the assumptions of the chosen statistical test

Assumptions of statistical tests are the conditions your data must meet for the test's results to be valid, and each test has its own. A common test in A/B testing is the z-test for proportions, which you use to compare proportions (such as conversion rates) between two groups. A key requirement is that users are randomly and independently assigned to the groups in the ratio you planned, usually a 50/50 split. The groups do not need to be exactly the same size, but they should not deviate from the planned split by more than chance would explain. You can perform a Sample Ratio Mismatch (SRM) test to check this assumption. If the groups are more unequal than expected, an external factor may be influencing the distribution of users (for example, the test does not work on a specific device or browser). This can seriously undermine the reliability of the A/B test.
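
An SRM test is commonly run as a chi-square goodness-of-fit test on the number of users per variant. Below is a minimal Python sketch for two variants using only the standard library. The function name and the default threshold of 0.001 are assumptions; SRM checks are often run with a stricter threshold than the main analysis. For more than two variants, `scipy.stats.chisquare(f_obs, f_exp)` performs the same test.

```python
from math import erfc, sqrt


def srm_check(observed: list[int], expected_ratios: list[float],
              threshold: float = 0.001) -> tuple[float, bool]:
    """Chi-square goodness-of-fit test for Sample Ratio Mismatch (two variants).

    observed: users per variant, e.g. [50_000, 51_500]
    expected_ratios: planned split, e.g. [0.5, 0.5]
    Returns (p-value, True if a mismatch is detected).
    """
    if len(observed) != 2 or len(expected_ratios) != 2:
        raise ValueError("this sketch handles exactly two variants")
    total = sum(observed)
    expected = [total * r / sum(expected_ratios) for r in expected_ratios]
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With two variants the statistic has 1 degree of freedom;
    # its upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = erfc(sqrt(chi2 / 2))
    return p_value, p_value < threshold


print(srm_check([50_000, 51_500], [0.5, 0.5]))  # flagged: investigate before analysing
print(srm_check([50_100, 49_900], [0.5, 0.5]))  # within normal random variation
```

If the check flags a mismatch, the usual advice is to find the cause (for example a broken variant on one browser) before trusting any result from the test.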

Be careful with deep dives

After your A/B test is complete, it is very tempting to look at many different metrics. With every statistical test you run, there is a chance of finding a statistically significant result when in reality there is no effect (at a 5% significance level, a 5% chance per test). This is known as a Type I error, or false positive. Running test after test therefore dramatically increases the chance of finding an effect that does not exist in reality. Try to limit the analysis to the KPIs or goals on which your decisions are based.
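
To make "dramatically" concrete: if no metric is truly affected and the tests are independent, the chance of at least one false positive across m tests is 1 - (1 - α)^m, which for 20 metrics at α = 0.05 is about 64%. When you genuinely need to examine several metrics, a correction such as Holm-Bonferroni (one of several options, not necessarily what Digital Power uses) keeps that overall error rate at α. The sketch below is in plain Python with hypothetical function names; `statsmodels.stats.multitest.multipletests(pvals, method="holm")` offers a ready-made version.

```python
def family_wise_error_rate(num_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across independent tests when all nulls are true."""
    return 1 - (1 - alpha) ** num_tests


def holm_bonferroni(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Holm-Bonferroni step-down procedure; True means the result stays significant."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p-value first
    significant = [False] * m
    for rank, i in enumerate(order):
        # Compare the k-th smallest p-value with alpha / (m - k + 1)
        if p_values[i] <= alpha / (m - rank):
            significant[i] = True
        else:
            break  # all larger p-values are also treated as not significant
    return significant


print(round(family_wise_error_rate(20), 2))
print(holm_bonferroni([0.01, 0.04, 0.03, 0.005]))
```

In the second call, only the metrics with p = 0.01 and p = 0.005 survive the correction, even though all four are below 0.05 on their own.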

The most important step after running an A/B test

Learn from your experiments

This may sound obvious, but genuinely learning from your A/B tests is harder than you might think. Why was this experiment successful? Are other departments struggling with the same problems? And on which other funnels or pages could this also work?

After a successful A/B test, it is tempting to implement the change and move on to the next test. We recommend saving all experiments in a database so you can gain insights across multiple experiments. Once you have run a few, you can look for similarities or patterns, for example solutions, psychological tactics or strategies that keep producing results. You can then use these insights to develop better, more relevant solutions.
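
Such an experiment database can start very small. The Python sketch below is a hypothetical illustration: the `Experiment` fields and the "win"/"loss"/"inconclusive" labels are assumptions, not a prescribed schema. It shows how tagging each experiment with the tactic it used makes it easy to spot which tactics tend to work.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Experiment:
    """One row in a hypothetical experiment log."""
    name: str
    page: str     # funnel step or page where the test ran
    tactic: str   # e.g. a psychological principle such as "social proof"
    outcome: str  # "win", "loss" or "inconclusive"


def win_rate_by_tactic(experiments: list[Experiment]) -> dict[str, float]:
    """Share of experiments per tactic that produced a significant win."""
    totals: dict[str, int] = defaultdict(int)
    wins: dict[str, int] = defaultdict(int)
    for exp in experiments:
        totals[exp.tactic] += 1
        if exp.outcome == "win":
            wins[exp.tactic] += 1
    return {tactic: wins[tactic] / totals[tactic] for tactic in totals}


log = [
    Experiment("Reviews on product page", "product", "social proof", "win"),
    Experiment("Customer count in checkout", "checkout", "social proof", "inconclusive"),
    Experiment("Countdown timer", "checkout", "urgency", "loss"),
]
print(win_rate_by_tactic(log))
```

In practice the same idea works in a spreadsheet or any database table; the important part is recording the tactic, page and outcome consistently for every experiment.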

Get started!

With our tools and guidelines, you can get started with A/B testing! We do understand that these guidelines contain a lot of technical, in-depth information. Would you like some assistance? Our specialists are more than happy to think it through with you!

This is an article by Mila, Customer Experience Specialist at Digital Power

Mila is a Customer Experience specialist at Digital Power. She has a background in Cognitive Psychology and likes to combine this knowledge about people and behaviour with data to arrive at the best solutions.

Customer Experience Specialist, mila.vanderzwaag@digital-power.com